Advanced Research on Usable Security and Privacy

Overview:

If you want to share your feedback, have a question or research idea, or want to collaborate, let’s get in touch!

Articles:

A Model of Contextual Factors Affecting Older Adults’ Information-Sharing Decisions in the US

Frik, A., Bernd, J., Egelman, S. (2022, August). A Model of Contextual Factors Affecting Older Adults’ Information-Sharing Decisions in the US. In ACM Transactions on Computer-Human Interaction.

The sharing of information between older adults and their friends, families, caregivers, and doctors promotes a collaborative approach to managing their emotional, mental, and physical well-being and health, prolonging independent living and improving care quality and quality of life in general. However, information flow in collaborative systems is complex, not always transparent to elderly users, and may raise privacy and security concerns. Because older adults’ decisions about whether to engage in information exchange affect interpersonal communications and delivery of care, it is important to understand the factors and context that influence those decisions. Our work contributes empirical evidence and suggests a systematic approach. In this paper, we present the results of semi-structured interviews with 46 older adults aged 65+ about their views on information collection, transmission, and sharing. We develop a detailed model of the contextual factors that combine in complex ways to affect older adults’ decision-making about information sharing. We discuss how our comprehensive model compares to existing frameworks for analyzing information sharing expectations and preferences. Finally, we suggest directions for future research and describe practical implications of our model for the design and evaluation of collaborative information-sharing systems, as well as for policy and consumer protection.

Users’ Expectations About and Use of Smartphone Privacy and Security Settings

Frik, A., Kim, J., Sanchez, J. R., & Ma, J. (2022, April). Users’ Expectations About and Use of Smartphone Privacy and Security Settings. In CHI Conference on Human Factors in Computing Systems (pp. 1-24).

With the growing smartphone penetration rate, smartphone settings remain one of the main models for information privacy and security controls. Yet, their usability is largely understudied, especially with respect to the usability impact on underrepresented socio-economic and low-tech groups. In an online survey with 178 users, we find that many people are not aware of smartphone privacy and security settings or their defaults, and have not configured them in the past, but are willing to do so in the future. Some participants perceive low self-efficacy and expect difficulties and usability issues with configuring those settings. Finally, we find that certain socio-demographic groups are more vulnerable to risks and feel less prepared to use smartphone settings to protect their online privacy and security.

Deciding on Personalized Ads: Nudging Developers About User Privacy

Tahaei, M., Frik, A., Vaniea, K. E. (2021, August). Deciding on Personalized Ads: Nudging Developers About User Privacy. In Seventeenth Symposium on Usable Privacy and Security (SOUPS’21).

Mobile advertising networks present personalized advertisements to developers as a way to increase revenue. These types of ads use data about users to select potentially more relevant content. However, choice framing also impacts app developers’ decisions, which in turn impact their users’ privacy. Currently, ad networks provide choices in developer-facing dashboards that control the types of information collected by the ad network as well as how users will be asked for consent. Framing and nudging have been shown to impact users’ choices about privacy, and we anticipate that they have a similar impact on choices made by developers.

We conducted a survey-based online experiment with 400 participants with experience in mobile app development. Across six conditions, we varied the choice framing of options around ad personalization. Participants in the condition where privacy consequences of ad personalization are highlighted in the options are significantly (11.06 times) more likely to choose non-personalized ads compared to participants in the Control condition with no information about privacy. Participants’ choice of ad type is driven by impact on revenue, user privacy, and relevance to users. Our findings suggest that developers are impacted by interfaces and need transparent options.

Evaluating and Redefining Smartphone Permissions with Contextualized Justifications for Mobile Augmented Reality Apps

Harborth, D., & Frik, A. (2021). Evaluating and Redefining Smartphone Permissions with Contextualized Justifications for Mobile Augmented Reality Apps. In Seventeenth Symposium on Usable Privacy and Security (SOUPS’21) (pp. 513-534).

Augmented reality (AR), and specifically mobile augmented reality (MAR), gained much public attention after the success of Pokémon Go in 2016, and since then has found application in online games, social media, entertainment, real estate, interior design, and other services. MAR apps are highly dependent on real-time context-specific information provided by the different sensors and data processing capabilities of smartphones (e.g., LiDAR, gyroscope, or object recognition). This dependency raises crucial privacy issues for end users. We evaluate whether the existing access permission systems, initially developed for non-AR apps, as well as proposed new permissions, relevant for MAR apps, provide sufficient and clear information to the users.

We address this research goal in two online survey-based experiments with a total of 581 participants. Based on our results, we argue that it is necessary to increase transparency about MAR apps’ data practices by requesting users’ permissions to access certain novel and privacy-invasive resources and functionalities commonly used in MAR apps, such as speech and face recognition. We also find that adding justifications, contextualized to the data collection practices of the app, improves transparency and can mitigate privacy concerns, at least in the context of data utilized to the users’ benefit. Better understanding of the app’s practices and lower concerns, in turn, increase the intentions to grant permissions. We provide recommendations for better transparency in MAR apps.

Privacy Champions in Software Teams: Understanding Their Motivations, Strategies, and Challenges

Tahaei, M., Frik, A., Vaniea, K. (2021). Privacy Champions in Software Teams: Understanding Their Motivations, Strategies, and Challenges. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI’21).

Software development teams are responsible for making and implementing software design decisions that directly impact end-user privacy, a challenging task to do well. Privacy Champions—people who strongly care about advocating privacy—play a useful role in supporting privacy-respecting development cultures. To understand their motivations, challenges, and strategies for protecting end-user privacy, we conducted 12 interviews with Privacy Champions in software development teams. We find that common barriers to implementing privacy in software design include: negative privacy culture, internal prioritisation tensions, limited tool support, unclear evaluation metrics, and technical complexity. To promote privacy, Privacy Champions regularly use informal discussions, management support, communication among stakeholders, and documentation and guidelines. They perceive code reviews and practical training as more instructive than general privacy awareness and on-boarding training. Our study is a first step towards understanding how Privacy Champions work to improve their organisation’s privacy approaches and improve the privacy of end-user products.

Privacy and Security Threat Models and Mitigation Strategies of Older Adults

Frik, A., Nurgalieva, L., Bernd, J., Lee, J., Schaub, F., & Egelman, S. (2019). Privacy and security threat models and mitigation strategies of older adults. In Fifteenth Symposium on Usable Privacy and Security (SOUPS’19).

Older adults (65+) are becoming primary users of emerging smart systems, especially in health care. However, these technologies are often not designed for older users and can pose serious privacy and security concerns due to their novelty, complexity, and propensity to collect and communicate vast amounts of sensitive information. Efforts to address such concerns must build on an in-depth understanding of older adults’ perceptions and preferences about data privacy and security for these technologies, and must account for variance in physical and cognitive abilities. In semi-structured interviews with 46 older adults, we identified a range of complex privacy and security attitudes and needs specific to this population, along with common threat models, misconceptions, and mitigation strategies. Our work adds depth to current models of how older adults’ limited technical knowledge, experience, and age-related declines in ability amplify vulnerability to certain risks; we found that health, living situation, and finances play a notable role as well. We also found that older adults often experience usability issues or technical uncertainties in mitigating those risks, and that managing privacy and security concerns frequently consists of limiting or avoiding technology use. We recommend educational approaches and usable technical protections that build on seniors’ preferences.

A Promise Is A Promise: The Effect of Commitment Devices on Computer Security Intentions

Frik, A., Malkin, N., Harbach, M., Peer, E., & Egelman, S. (2019, April). A Promise Is A Promise: The Effect of Commitment Devices on Computer Security Intentions. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (p. 604). ACM.

Commitment devices are a technique from behavioral economics that has been shown to mitigate the effects of present bias, the tendency to discount future risks and gains in favor of immediate gratification. In this paper, we explore the feasibility of using commitment devices to nudge users towards complying with varying online security mitigations. Using two online experiments, with over 1,000 participants total, we offered participants the option to be reminded or to schedule security tasks in the future. We find that both reminders and commitment nudges can increase users’ intentions to install security updates and enable two-factor authentication, but not to configure automatic backups. Using qualitative data, we gain insights into the reasons for postponement and how to improve future nudges. We posit that current nudges may not live up to their full potential, as the timing options offered to users may be too rigid.
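
Present bias is often formalized with quasi-hyperbolic (beta-delta) discounting. The sketch below illustrates the idea under that assumption; it is not the model used in the paper, and the function name `perceived_cost` and all parameter values are hypothetical.

```python
def perceived_cost(effort, delay_days, beta=0.7, delta=0.99):
    """Perceived cost today of a task carried out after `delay_days`.

    Quasi-hyperbolic discounting: an immediate cost counts in full,
    while any delayed cost is scaled by beta * delta ** delay_days.
    beta < 1 captures present bias; the default values are hypothetical.
    """
    if delay_days == 0:
        return effort
    return beta * (delta ** delay_days) * effort


effort = 10.0  # hypothetical effort of installing a security update
cost_now = perceived_cost(effort, delay_days=0)
cost_next_week = perceived_cost(effort, delay_days=7)

# Under these assumptions, postponing looks cheaper when judged today.
print(f"now: {cost_now:.2f}, next week: {cost_next_week:.2f}")
```

With these assumed parameters a postponed task always feels cheaper than doing it immediately, which is one way to read why a commitment to a fixed future time might help: the decision is made before that time becomes "now".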

The Impact of Ad-Blockers on Product Search and Purchase Behavior: A Lab Experiment

Frik, A., Haviland, A., & Acquisti, A. (2020). The Impact of Ad-Blockers on Product Search and Purchase Behavior: A Lab Experiment. 29th USENIX Security Symposium (USENIX Security 20).

Ad-blocking applications have become increasingly popular among Internet users. Ad-blockers offer various privacy- and security-enhancing features: they can reduce personal data collection and exposure to malicious advertising, help safeguard users’ decision-making autonomy, reduce users’ costs (by increasing the speed of page loading), and improve the browsing experience (by reducing visual clutter). On the other hand, the online advertising industry has claimed that ads increase consumers’ economic welfare by helping them find better, cheaper deals faster. If so, using ad-blockers would deprive consumers of these benefits. However, little is known about the actual economic impact of ad-blockers.

We designed a lab experiment (N=212) with real economic incentives to understand the impact of ad-blockers on consumers’ product searching and purchasing behavior, and the resulting consumer outcomes. We focus on the effects of blocking contextual ads (ads targeted to individual, potentially sensitive, contexts, such as search queries in a search engine or the content of web pages) on how participants searched for and purchased various products online, and the resulting consumer welfare.

We find that blocking contextual ads did not have a statistically significant effect on the prices of products participants chose to purchase, the time they spent searching for them, or how satisfied they were with the chosen products, prices, and perceived quality. Hence we do not reject the null hypothesis that consumer behavior and outcomes stay constant when such ads are blocked or shown. We conclude that the use of ad-blockers does not seem to compromise consumer economic welfare (along the metrics captured in the experiment) in exchange for privacy and security benefits. We discuss the implications of this work in terms of end-users’ privacy, the study’s limitations, and future work to extend these results.

A Qualitative Model of Older Adults’ Contextual Decision-Making About Information Sharing

Frik, A., Bernd, J., Alomar, N., & Egelman, S. (2020). A Qualitative Model of Older Adults’ Contextual Decision-Making About Information Sharing. In The 2020 Workshop on Economics of Information Security (WEIS’20).

The sharing of information between older adults and their friends, families, caregivers, and doctors promotes a collaborative approach to managing their emotional, mental, and physical well-being and health, prolonging independent living and improving care quality and quality of life in general. However, information flow in collaborative systems is complex, not always transparent to elderly users, and may raise privacy and security concerns. Because older adults’ decisions about whether to engage in information exchange affect interpersonal communications and delivery of care, it is important to understand the factors that influence those decisions. While a body of existing literature has explored the information sharing expectations and preferences of the general population, specific research on the perspectives of older adults is less comprehensive. Our work contributes empirical evidence and suggests a systematic approach. In this paper, we present the results of semi-structured interviews with 46 older adults aged 65+ about their views on information collection, transmission, and sharing using traditional ICT and emerging technologies (such as smart speakers and wearable health trackers). Based on analysis of this qualitative data, we develop a detailed model of the contextual factors that combine in complex ways to affect older adults’ decision-making about information sharing. We also discuss how our comprehensive model compares to existing frameworks for analyzing information sharing expectations and preferences. Finally, we suggest directions for future research and describe practical implications of our model for the design and evaluation of collaborative information-sharing systems, as well as for policy and consumer protection.

Investigating Users’ Preferences and Expectations for Always-Listening Voice Assistants

Tabassum, M., Kosiński, T., Frik, A., Malkin, N., Wijesekera, P., Egelman, S., & Lipford, H. R. (2019). Investigating Users’ Preferences and Expectations for Always-Listening Voice Assistants. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 3(4), 153.

Many consumers now rely on different forms of voice assistants, both stand-alone devices and those built into smartphones. Currently, these systems react to specific wake-words, such as Alexa, Siri, or ‘Ok, Google.’ However, with advancements in natural language processing, the next generation of voice assistants could instead always listen to the acoustic environment and proactively provide services and recommendations based on conversations without being explicitly invoked. We refer to such devices as ‘always listening voice assistants’ and explore expectations around their potential use. In this paper, we report on a 178-participant survey investigating the potential services people anticipate from such a device and how they feel about sharing their data for these purposes. Our findings reveal that participants can anticipate a wide range of services pertaining to a conversation; however, most of the services are very similar to those that existing voice assistants currently provide with explicit commands. Participants are more likely to consent to share a conversation when they do not find it sensitive, they are comfortable with the service and find it beneficial, and when they already own a stand-alone voice assistant. Based on our findings we discuss the privacy challenges in designing an always-listening voice assistant.

Nudge Me Right: Personalizing Online Security Nudges to People’s Decision-Making Styles

Peer, E., Egelman, S., Harbach, M., Malkin, N., Mathur, A., & Frik, A. (2020). Nudge Me Right: Personalizing Online Security Nudges to People’s Decision-Making Styles. Computers in Human Behavior, vol. 109, 106347. https://doi.org/10.1016/j.chb.2020.106347

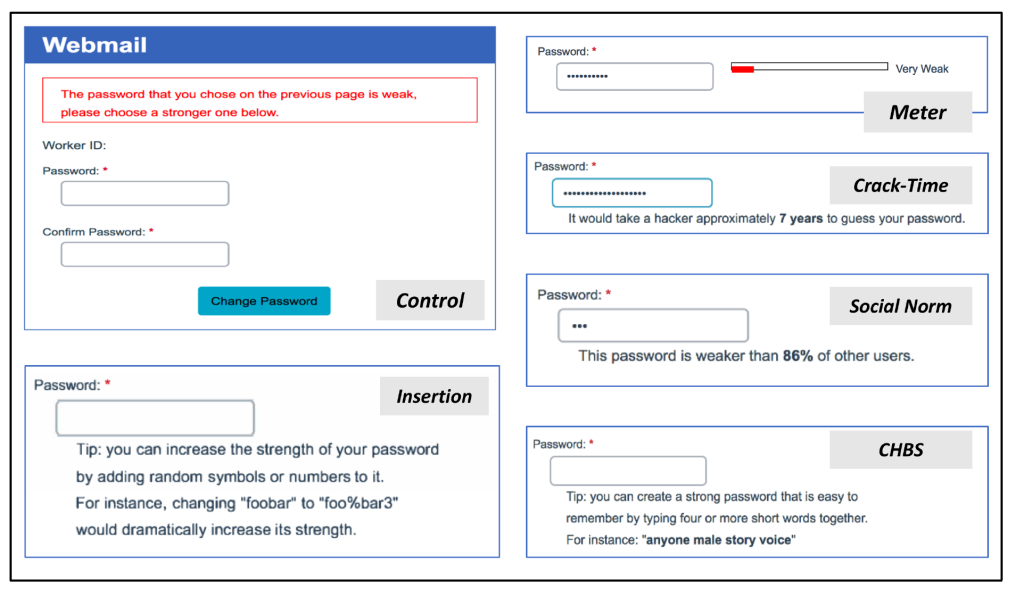

Nudges are simple and effective means to help people make decisions that could benefit themselves or society. However, effects of nudges are limited to local maxima, as they are almost always designed with the “average” person in mind, instead of being customized to different individuals. Such “nudge personalization” has been advocated before, but its actual potency and feasibility have never been systematically investigated. Using the ubiquitous domain of online password nudges as our testbed, we present a novel approach that utilizes people’s decision-making style to personalize the online nudge they receive. In two large-scale studies, we show how and when personalized nudges can lead to considerably stronger and more secure passwords, compared to administering “one-size-fits-all” nudges. We discuss the implications of these findings and how more efforts by researchers and policy-makers should and could be made to guarantee that each individual is nudged in a way most right for them.

A Measure of the Implicit Value of Privacy Under Risk

Frik, A. and Gaudeul, A. (2020), “A measure of the implicit value of privacy under risk”, Journal of Consumer Marketing, Vol. 37 No. 4, pp. 457-472. https://doi.org/10.1108/JCM-06-2019-3286

Many online transactions and digital services depend on consumers’ willingness to take privacy risks, such as when shopping online, joining social networks, using online banking, or interacting with e-health platforms. Their decisions depend not only on how much they would suffer if their data were revealed, but also on how uncomfortable they feel about taking such a risk. Such aversion to risk is a neglected factor when evaluating the value of privacy. We propose an empirical method to measure both privacy risk aversion and privacy worth and how those affect privacy decisions.

In our experiment, we let individuals play privacy lotteries and derive a measure of the value of privacy under risk (VPR). We empirically test the validity of this measure in a laboratory experiment with 148 participants. Individuals were asked to make a series of incentivized decisions on whether to incur the risk of revealing private information to other participants.

Findings: The results confirm that the willingness to incur a privacy risk is driven by a complex array of factors including risk aversion, self-reported value for private information, and general attitudes to privacy (derived from surveys). The value of privacy under risk does not depend on whether there is a preexisting threat to privacy. We find qualified support for the existence of an order effect, whereby presenting financial choices prior to privacy ones leads to less concern for privacy.

Value: We present a novel method to measure the value of privacy under risk that takes account of both the value of private information to consumers and their tolerance for privacy risks. We explain how this method can be used more generally to elicit attitudes to a wide range of privacy risks involving exposure of various types of private information.

Practical implications: Attitude to risk in the domain of privacy decisions is largely understudied. In this paper we take a first step towards closing this empirical and methodological gap: we offer (and validate) a method for the incentivized elicitation of the implicit value of privacy under risk and propose a robust and meaningful monetary measure of the level of aversion to privacy risks. This measure is a crucial step in designing and implementing the practical strategies for evaluating privacy as a competitive advantage, and designing markets for privacy risk regulations (e.g. through cyber insurances).

Social implications: Our study advances research on the economics of consumer privacy, one of the most controversial topics in the digital age. In light of the proliferation of privacy regulations (including the recent EU GDPR and the California Consumer Privacy Act), our method for measuring the value of privacy under risk provides an important instrument for policy-makers’ informed decisions regarding what tradeoffs consumers consider beneficial and fair, and where to draw the line for violations of consumers’ expectations, preferences and welfare.
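
The abstract above distinguishes the worth of private information from the discomfort of risking its disclosure. The following sketch shows one simple way that distinction could be expressed numerically; it is an illustration under stated assumptions, not the elicitation procedure or the VPR measure from the paper. The function names, the disclosure probability, the stated worth, and the list of decisions are all hypothetical.

```python
def expected_loss(disclosure_probability, privacy_worth):
    """Expected monetary loss of a lottery that may reveal private data."""
    return disclosure_probability * privacy_worth


def switching_point(decisions):
    """Smallest payment at which the participant accepted the privacy risk.

    `decisions` is a list of (payment, accepted) pairs; returns None
    if the participant never accepted.
    """
    accepted = [payment for payment, took_risk in decisions if took_risk]
    return min(accepted) if accepted else None


def risk_premium(min_accepted_payment, disclosure_probability, privacy_worth):
    """Compensation demanded beyond the expected loss (aversion to the risk)."""
    return min_accepted_payment - expected_loss(disclosure_probability, privacy_worth)


# Hypothetical participant: values the data at 4.0 and faces a 50% disclosure risk.
decisions = [(1.0, False), (2.0, False), (3.0, True), (4.0, True)]
minimum_payment = switching_point(decisions)
premium = risk_premium(minimum_payment, disclosure_probability=0.5, privacy_worth=4.0)
print(f"minimum accepted payment: {minimum_payment}, risk premium: {premium}")
```

In this toy setup a positive premium means the participant asks for more than the expected loss, which is one way to read aversion to privacy risk as separate from the worth of the information itself.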

Information Design in An Aged Care Context: Views of Older Adults on Information Sharing in a Care Triad

Nurgalieva, L., Frik, A., Ceschel, F., Egelman, S., & Marchese, M. (2019, May). Information Design in An Aged Care Context: Views of Older Adults on Information Sharing in a Care Triad. In Proceedings of the 13th EAI International Conference on Pervasive Computing Technologies for Healthcare (pp. 101-110). ACM.

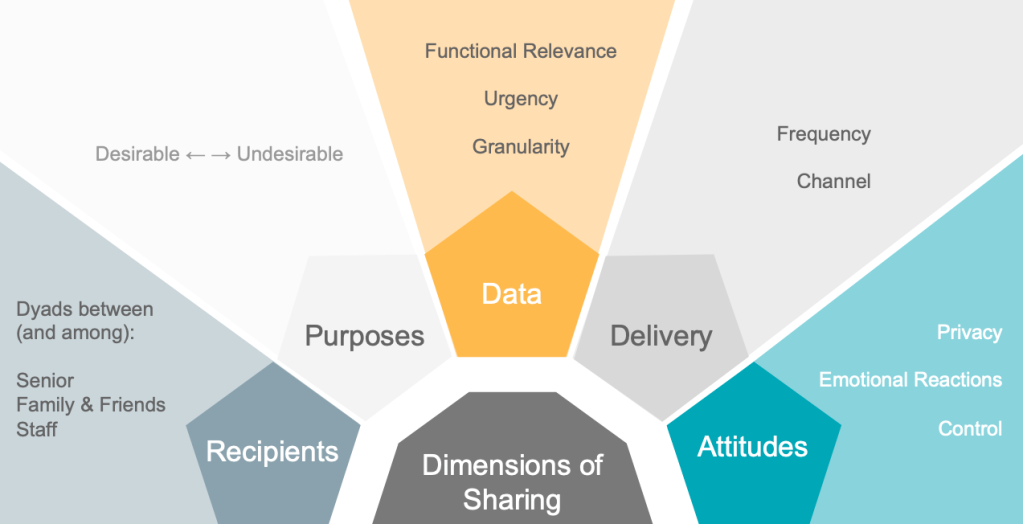

The adoption of technological solutions for aged care is rapidly increasing in developed countries. New technologies facilitate the sharing of health information among the “care triad”: the elderly care recipient, their family, and care staff. In order to develop user-centered technologies for this population, we believe that it is necessary to first examine their views about the sharing of health and well-being information (HWBI). Through in-depth semi-structured interviews with 12 residents of senior care facilities, we examined the reasons why older adults choose to share or not to share their HWBI with those involved in their care. We examine how the purpose of use, functional relevance, urgency, anticipated emotional reactions, and individual attitudes to privacy and control affect their opinions about sharing. We then explore how those factors define what granularity of data, communication frequency, and channel older adults find appropriate for sharing HWBI with various recipients. Based on our findings, we suggest design implications.

Perceived Trust in Websites’ Privacy Practices Affects Consumers’ Purchase Intentions

Frik, A., & Mittone, L. (2019). Factors Influencing the Perception of Website Privacy Trustworthiness and Users’ Purchasing Intentions: The Behavioral Economics Perspective. Journal of Theoretical and Applied Electronic Commerce Research, 14(3), 89-125.

In this study, we identified the factors that influence consumer purchasing intentions and their perceptions of the trustworthiness of the privacy-related practices of e-commerce websites. We produced a list of website attributes that represent these factors in a series of focus groups. Then we constructed and validated a research model from an online survey of 117 adult participants. We found that security, privacy (including awareness, information collection, and control), and reputation (including company background and consumer reviews) have a strong effect on trust and willingness to purchase, while website quality plays only a marginal role. Although the perception of trustworthiness and purchasing intention were positively correlated, in some cases participants were more willing to buy from a website that they judged as untrustworthy with regard to privacy. We investigated how behavioral biases and decision-making heuristics may explain the discrepancy between perception and behavioral intention. Finally, we determined which website attributes and individual characteristics impact customers’ trust and willingness to buy.